Websites often suffer drops in organic traffic. These can be caused by updates to Google’s algorithm, or by changes to the content or other elements of the site.

However, it’s not always easy to understand how and why a website has been affected.

I’ve dealt with many sites that have suffered drops and have created a process and checklist to uncover why it’s happened and create actions to try and remedy the situation.

I cannot promise you will always find a how and why, but this checklist will guide you to the right areas to check.

Here is the checklist in Google Sheets, which you can copy: https://docs.google.com/spreadsheets/d/1So3mlPdxVmvHJeZ7IjwzzBWOipbxk7QODgzB8q3iZuI/edit?usp=sharing

Below is a guide to the process.

1. Validate The Drop

Before you spend time investigating a possible drop in traffic, first you need to validate there actually was a drop in traffic and not an error in reporting or yearly traffic fluctuations.

Here are the steps to validate:

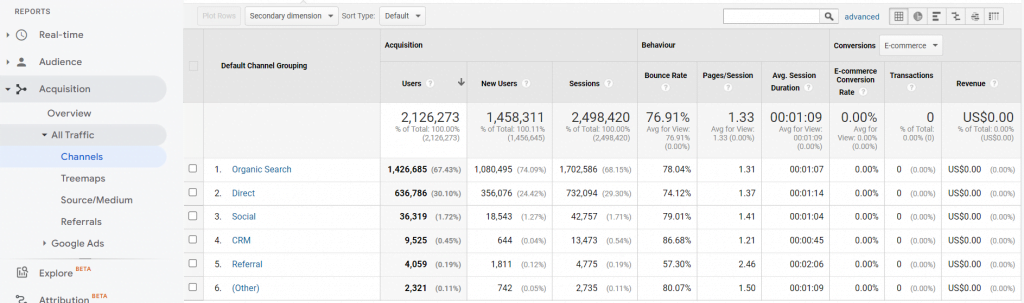

1.1. Do you see the same organic traffic drop in both GSC and GA?

As there is a delay of a few days before data appears in Google Search Console (herein referred to as GSC), Google Analytics (or another analytics platform) is usually the first place people notice a drop in organic traffic.

If there is a drop in GA traffic, my advice is usually not to panic, and to wait until the data catches up in GSC.

I have had many experiences where a suspected drop in organic traffic is actually an issue in Google Analytics tracking.

A few things I have experienced: the GA/GTM tag going missing or being incorrectly implemented after a website change, the GA/GTM tag being switched to a different account, traffic being attributed to an incorrect channel, and GA not tracking AMP traffic for a period of time.

I once had to cut a holiday short as a site was losing 80% of traffic. Once I investigated, I discovered that organic traffic was being attributed to a different channel. Don’t let this be you: enjoy your holiday and validate the drop!

If you cannot use an analytics platform, you can also validate a drop in traffic in server logs.
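
As a rough illustration of the server log route, the sketch below counts daily requests whose referrer is a Google domain. It assumes logs in the common "combined" format; the regular expressions and the `daily_google_referrals` name are my own illustrative choices, so adjust them to your server. Keep in mind that a referrer alone cannot separate organic clicks from paid ones.

```python
import re
from collections import Counter
from datetime import datetime

# Matches the timestamp and the referrer in a "combined" format access log line.
# Assumption: your server writes this format; adjust the pattern if it does not.
LOG_PATTERN = re.compile(
    r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]+\] "[^"]*" \d{3} \S+ "(?P<referrer>[^"]*)"'
)
# Assumption: any google.<tld> referrer counts as a visit from Google search.
GOOGLE_REFERRER = re.compile(r"^https?://(www\.)?google\.[a-z.]+(/|$)", re.IGNORECASE)


def daily_google_referrals(log_lines):
    """Count requests per day (ISO date) whose referrer is a Google domain."""
    counts = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and GOOGLE_REFERRER.match(match.group("referrer")):
            day = datetime.strptime(match.group("day"), "%d/%b/%Y").date()
            counts[day.isoformat()] += 1
    return dict(counts)
```

If the daily counts fall at the same time as GA, the drop is probably real rather than a tracking problem.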

1.2. Is the drop only for organic, or also other channels?

This is another check to do in GA: see whether there is a similar drop in other channels.

If you see a drop in traffic for other channels, then it’s unlikely to be purely an organic search issue. It’s more likely a website or tracking issue, or seasonality.

When checking other channels, direct is not a good channel to compare. In my experience a large section of direct traffic is actually misattributed organic traffic. This could be due to people using ad blockers which block GA tracking scripts, issues with the script firing or some other issue.
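
A quick way to make this comparison is to work out the percentage change per channel. The sketch below assumes you have exported session totals per channel for the period before and after the drop; the channel names and the `channel_changes` function are hypothetical.

```python
def channel_changes(before, after):
    """Return the percentage change in sessions for each channel.

    `before` and `after` map channel name -> sessions for the two periods.
    """
    changes = {}
    for channel, sessions_before in before.items():
        if sessions_before == 0:
            continue  # nothing to compare against, and avoids division by zero
        sessions_after = after.get(channel, 0)
        changes[channel] = round(
            (sessions_after - sessions_before) / sessions_before * 100, 1
        )
    return changes
```

If only organic shows a large negative change while the other channels are roughly flat, the issue is more likely specific to organic search.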

1.3. Is the drop caused by seasonality?

Drops in organic traffic can be caused by seasonality. Examples in the western world are Christmas & NYE and those should be obvious. However, there can be other holidays (especially if doing international SEO) or other events specific to the niche of the site that can cause dips in traffic.

Always check back at least two years (if such data is available) for similar dips in traffic. Some holidays and events fall on different dates each year (Ramadan as an example), so don’t compare the exact dates, but look for trends around the same dates, both before and after.
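
To look for the same dip in earlier years without comparing exact dates, you could compare the average traffic in a window before and after the same point in each year. This is only a sketch: the 14-day window, the function names, and the idea of using a simple ratio are my assumptions, not a standard method.

```python
from datetime import date, timedelta


def dip_ratio(daily_sessions, anchor, window_days=14):
    """Average sessions from `anchor` onwards divided by the average just before it."""
    before = [daily_sessions.get(anchor - timedelta(days=i)) for i in range(1, window_days + 1)]
    after = [daily_sessions.get(anchor + timedelta(days=i)) for i in range(window_days)]
    before = [v for v in before if v is not None]
    after = [v for v in after if v is not None]
    if not before or not after or sum(before) == 0:
        return None  # not enough data for that year
    return (sum(after) / len(after)) / (sum(before) / len(before))


def yearly_dip_ratios(daily_sessions, drop_date, years_back=2, window_days=14):
    """Compute the dip ratio around the same calendar date in previous years."""
    ratios = {}
    for offset in range(years_back + 1):
        year = drop_date.year - offset
        try:
            anchor = drop_date.replace(year=year)
        except ValueError:  # 29 February in a non-leap year
            anchor = drop_date.replace(year=year, day=28)
        ratios[year] = dip_ratio(daily_sessions, anchor, window_days)
    return ratios
```

A ratio well below 1 in previous years as well as this year suggests the dip is seasonal. A wider window helps with holidays that move between years.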

Additionally, if you are new to the business, ask other departments if such a dip in users is expected around this time.

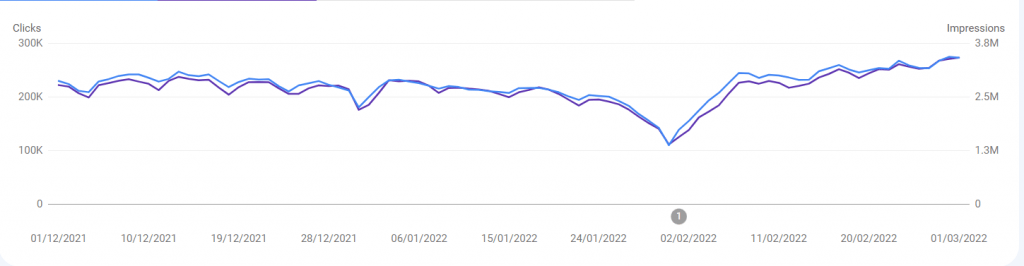

1.4. Does it appear there has been a Google update recently, either confirmed or unconfirmed?

If you’ve disregarded the possible causes from previous points and are confident that it’s only organic traffic which is dropping, next is to check if there has been a Google update around the time.

Google runs thousands of tests throughout the year, so the cause could be one of its many unannounced changes. However, every 6 months or so Google makes big changes to its algorithm, known as Core Updates. These are announced because they have big, noticeable effects on the search results.

There are also other ad-hoc changes that Google will officially announce, which usually target specific areas such as page speed, user experience, or a specific type of content such as review sites.

There are a few different resources to check for possible Google updates:

a. https://twitter.com/searchliaison This is an official Twitter account from Google for sharing news and insights. When a core update or a specific update is launched, it will be announced here.

c. https://www.seroundtable.com/category/google-updates seroundtable is a website which reports on the goings-on in the world of search and is updated multiple times a day. The page linked above covers all Google updates, announced or not, and is probably the best place to understand if changes are going on in Google.

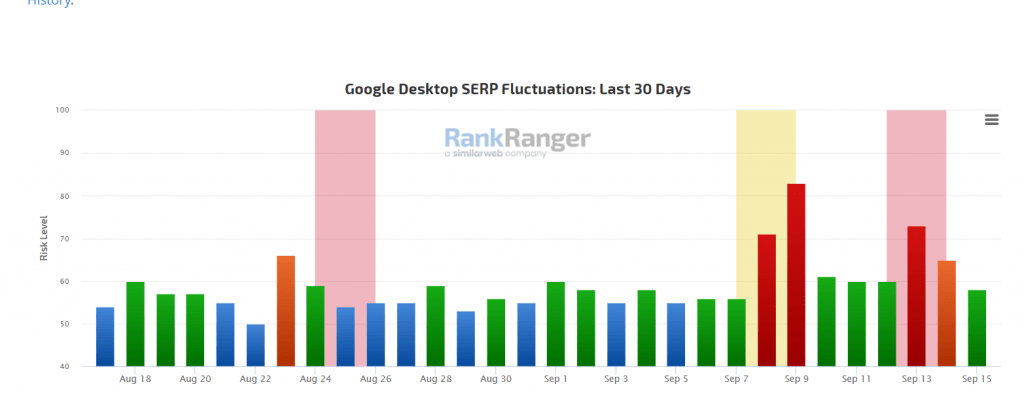

d. SERP fluctuation trackers.

There are many tools which attempt to track changes in Google’s daily search results. They track a database of keywords and monitor the search results every day. When there are significant changes in the rankings of many websites, this can be a sign a change has been made in Google.

Here are the ones I’m aware of at the moment:

https://moz.com/mozcast

https://algoroo.com/

https://serpmetrics.com/flux/

https://www.advancedwebranking.com/google-algorithm-changes/

https://www.rankranger.com/rank-risk-index

https://www.accuranker.com/grump/

https://cognitiveseo.com/signals/

https://www.semrush.com/sensor/

https://www.ayima.com/pulse/

https://serpstat.com/projects/search-engine-storm/

https://www.serpwoo.com/stats/volatility/

https://app.sistrix.com/en/google-update-radar/uk/mobile

https://mangools.com/insights/serp?ref=menu-sw

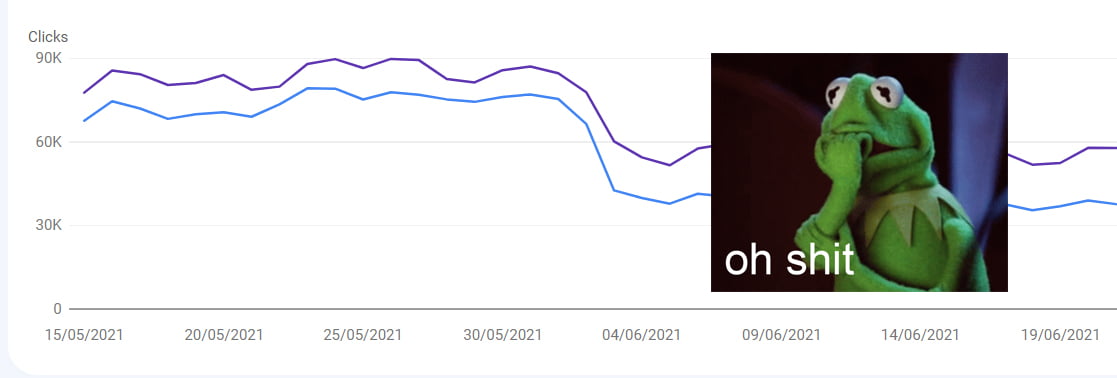

2. What Caused the Drop?

When your organic traffic drops, it can be caused by a few different things, such as changes in keyword rankings, the number of keywords ranking, and CTR. It’s important to understand what caused the drop, so you can do a correct analysis further on in the investigation.

You should ideally get this data from Search Console. If you are using data from a rank tracker, you’ll only have insights for the keywords you manually added to it or the keywords it automatically picked up – it won’t be all the keywords you are getting clicks or impressions for.

In the Search Console UI, you are limited to the first 1000 keywords and URLs. If your site only gets a modest amount of traffic, you can use the GSC UI or export the data. If you are getting traffic from more than 1000 keywords (even most small sites will be), GSC has an API to extract all the data.

The easiest way to do this is via the fantastic Google Sheets add-on, Search Analytics for Sheets: https://searchanalyticsforsheets.com/

Using this you can easily extract all the data for keywords, pages, clicks, impressions, and CTR going back 16 months.
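
If you would rather call the API yourself, the main thing to handle is paging past the per-request row limit. The sketch below shows one way to do that. `fetch_all_rows` and `run_query` are names I have made up; the request body fields (`startDate`, `endDate`, `dimensions`, `rowLimit`, `startRow`) are those of the Search Analytics query method, and authentication is assumed to be set up elsewhere.

```python
def fetch_all_rows(run_query, start_date, end_date,
                   dimensions=("query", "page"), page_size=25000):
    """Page through Search Analytics results until no more rows come back.

    `run_query` is any callable that takes a request body (dict) and returns
    the API response (dict). With google-api-python-client this would
    typically be something like:
        lambda body: service.searchanalytics().query(siteUrl=SITE, body=body).execute()
    where `service` and `SITE` are created by you (assumed, not shown here).
    """
    rows, start_row = [], 0
    while True:
        body = {
            "startDate": start_date,  # "YYYY-MM-DD"
            "endDate": end_date,
            "dimensions": list(dimensions),
            "rowLimit": page_size,  # the API allows at most 25,000 per request
            "startRow": start_row,
        }
        page = run_query(body).get("rows", [])
        rows.extend(page)
        if len(page) < page_size:
            return rows  # a short (or empty) page means we have everything
        start_row += page_size
```

Passing the query function in as a parameter keeps the paging logic easy to test without credentials.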

When I’m analysing a drop in traffic, I like to wait 1 week after the drop. Then I compare the 7 days just before the drop with the 7 days just after it, so both periods cover the same days of the week. This ensures you’re analysing like-for-like data, which is important: you don’t want to be comparing a day with high traffic against a day with low traffic, such as a Monday vs a Saturday.
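
The two comparison periods can be worked out from the date of the drop. This is a minimal sketch; `comparison_windows` is a hypothetical helper, and it treats the drop date as the first day of the "after" period.

```python
from datetime import date, timedelta


def comparison_windows(drop_date, days=7):
    """Return ((before_start, before_end), (after_start, after_end)), inclusive."""
    before = (drop_date - timedelta(days=days), drop_date - timedelta(days=1))
    after = (drop_date, drop_date + timedelta(days=days - 1))
    return before, after
```

With 7-day windows, each period contains every day of the week exactly once, so weekday patterns cancel out.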

2.1. Was there a drop in keyword ranking positions?

A drop in organic traffic can be caused by a drop in your avg. position for the keywords sending traffic. If you’ve dropped from position 2 to position 8, that’s going to result in a drop in traffic.

This could be for all keywords or only a selection of them.

So, if using Search Analytics for Sheets, extract all the keywords sending clicks and their avg position before and after the drop. Then compare the same keywords to see how they changed position after the drop.
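
If you prefer to do this comparison outside of a spreadsheet, something like the following would work. It assumes you have two mappings of keyword to average position, one per period; the function name and the 1-position threshold are arbitrary choices of mine.

```python
def position_changes(before, after, min_drop=1.0):
    """List keywords present in both periods whose average position got worse.

    `before` and `after` map keyword -> average position (lower is better).
    Returns (keyword, old_position, new_position, positions_lost) tuples.
    """
    dropped = []
    for keyword, old_pos in before.items():
        new_pos = after.get(keyword)
        if new_pos is not None and new_pos - old_pos >= min_drop:
            dropped.append((keyword, old_pos, new_pos, round(new_pos - old_pos, 1)))
    # Biggest drops first
    return sorted(dropped, key=lambda row: row[3], reverse=True)
```

Keywords that disappear entirely after the drop are not included here; they are covered by the check in 2.2.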

2.2. Was there a drop in the total number of keywords ranking?

I’ve seen people asking for advice during a traffic drop saying that their avg position and CTR are stable in the GSC UI, yet they are losing traffic.

This can happen when the total number of keywords sending traffic has dropped: your avg. position and CTR may seem stable, but fewer keywords are sending traffic.

Pull the data with Search Analytics for Sheets and total up the number of keywords sending traffic before vs after the drop.

Sometimes, main head keywords will be unaffected, but many longtail keywords may have been lost, which can ultimately make up a large amount of the traffic.
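
Here is a small sketch of that count, assuming two mappings of keyword to clicks (one per period). The `keyword_coverage` name and the output fields are illustrative.

```python
def keyword_coverage(before_clicks, after_clicks):
    """Compare how many keywords sent at least one click in each period."""
    before_kw = {k for k, clicks in before_clicks.items() if clicks > 0}
    after_kw = {k for k, clicks in after_clicks.items() if clicks > 0}
    lost = before_kw - after_kw
    return {
        "before": len(before_kw),
        "after": len(after_kw),
        "lost": sorted(lost),
        # Clicks those lost keywords used to send, to size the impact
        "lost_clicks": sum(before_clicks[k] for k in lost),
    }
```

Adding up the clicks from the lost keywords shows how much of the drop the long tail accounts for.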

2.3. Was there a drop in CTR?

If you have analysed your rankings and total keywords sending traffic and they seem stable, the cause for a drop in traffic could be that the CTR (click-through rate) has changed.

Here, you should collect the keywords and their pages where a significant CTR change has been noted.
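
CTR is simply clicks divided by impressions, so the affected keyword and page pairs can be collected like this. The minimum impressions and the 2 percentage point threshold are assumptions to filter out noise; pick values that suit your site.

```python
def ctr_changes(before, after, min_impressions=100, min_change=0.02):
    """Find (keyword, page) pairs whose CTR moved by at least `min_change`.

    `before` and `after` map (keyword, page) -> (clicks, impressions).
    Returns ((keyword, page), ctr_before, ctr_after) tuples.
    """
    changes = []
    for key, (clicks_b, impr_b) in before.items():
        if key not in after:
            continue
        clicks_a, impr_a = after[key]
        if impr_b < min_impressions or impr_a < min_impressions:
            continue  # too little data for a meaningful CTR
        ctr_b, ctr_a = clicks_b / impr_b, clicks_a / impr_a
        if abs(ctr_a - ctr_b) >= min_change:
            changes.append((key, round(ctr_b, 3), round(ctr_a, 3)))
    return changes
```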

3. Where Was The Drop?

When a site sees a significant drop in traffic, it may only be on a subset of pages, or it may be across the entire site.

We need to gather this data for further investigation in the next steps.

3.1. Was it category/page type specific, or across the entire site?

Using the data pulled in Search Analytics for Sheets in the previous steps, segment the data by the different areas or page types of the site.

Was the drop focused on certain areas of the site or certain content types, or did the entire site suffer?
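
One simple way to segment is by the first folder in the URL. This sketch assumes the first folder identifies the area or page type, which will not be true for every site; you may need your own mapping of URLs to page types.

```python
from collections import defaultdict
from urllib.parse import urlparse


def clicks_by_section(before, after):
    """Sum clicks per top-level URL folder for two periods.

    `before` and `after` map URL -> clicks. Returns section -> (before, after).
    """
    totals = defaultdict(lambda: [0, 0])
    for index, period in enumerate((before, after)):
        for url, clicks in period.items():
            segments = [s for s in urlparse(url).path.split("/") if s]
            # Assumption: the first folder identifies the site area or page type.
            # Pages that sit directly under the root are grouped as "/".
            section = "/" + segments[0] + "/" if len(segments) > 1 else "/"
            totals[section][index] += clicks
    return {section: tuple(pair) for section, pair in totals.items()}
```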

3.2. Did the pages drop rankings for all search terms or only some?

Analyse keywords that caused a drop in traffic and the pages they led to.

Now check the other keywords for the same pages.

Did the majority of keywords for the pages also have a drop in rankings, or was it only for a small selection of the keywords that send traffic to the page?
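
To answer this per page, you can count how many of each page’s keywords lost positions. In this sketch a keyword that no longer appears after the drop is treated as dropped, which is my assumption, and the 1-position threshold is arbitrary.

```python
from collections import defaultdict


def share_of_keywords_dropped(before, after, min_drop=1.0):
    """For each page, return (keywords_dropped, keywords_total).

    `before` and `after` map (page, keyword) -> average position.
    """
    counts = defaultdict(lambda: [0, 0])
    for (page, keyword), old_pos in before.items():
        new_pos = after.get((page, keyword))
        counts[page][1] += 1
        # A keyword missing after the drop is treated as dropped (assumption).
        if new_pos is None or new_pos - old_pos >= min_drop:
            counts[page][0] += 1
    return {page: tuple(pair) for page, pair in counts.items()}
```

A page where nearly all keywords dropped points to a page-level problem, while a page where only a few dropped points to something specific to those search terms.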

4. Why Was There a Drop?

OK, now we’ve validated there is an issue with organic traffic, we’ve gathered the data on what caused the drop and we have the data on where the drop happened on the site.

It’s now time to try and understand why there was a drop to find actions to remedy the situation.

No evidence of a Google update.

The first six checks here (4.1 to 4.6) are generally for when you don’t believe there has been a Google update, announced or otherwise (Step 1.4). If there is no Google update, the drop is most likely caused by a change to page content or some technical issue on the site.

So, these start off with some quick sanity checks, then go into some deeper technical checks.

Some of these are quick checks, but some will need deep manual analysis which will take time.

For large websites it won’t be possible to do some of these checks for all pages, so you will have to do spot checks, or prioritise pages that had the biggest losses.

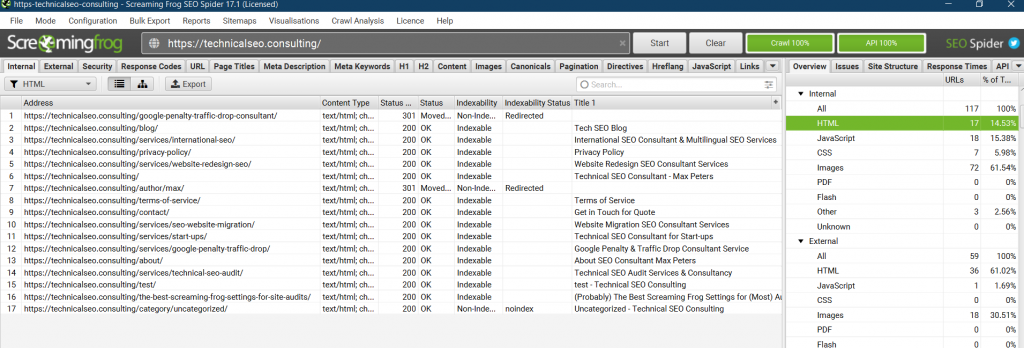

4.1. Are the affected pages still indexable and indexed?

This is a sanity check and one that can be automated pretty easily. Once we have a list of pages which have been affected in steps 2 + 3 we want to quickly check if these pages can still be indexed.

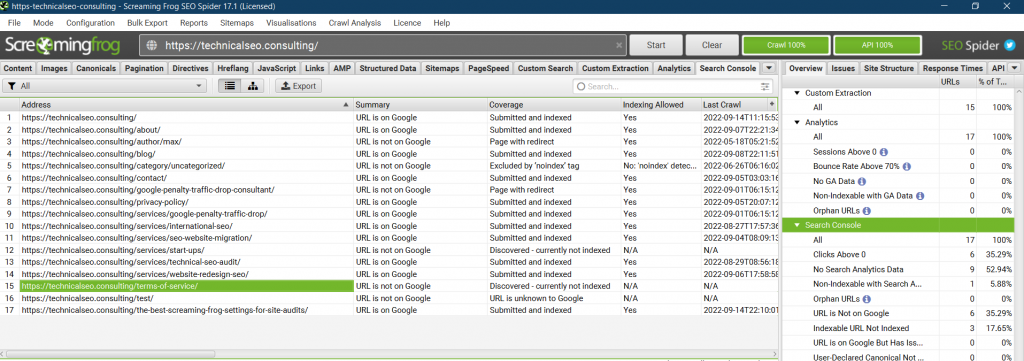

For a small number of pages, you can check manually, but the easiest way is to use Screaming Frog in list mode and paste in all the URLs to gather the elements we want to check.

Which are:

- URL is 200 status. It is not a 404, soft 404, 5xx, or some other status code.

- URL does not redirect to a different URL

- URL is not set to noindex

- URL is not blocked in robots.txt

- URL is not canonicalised to a different URL (the canonical tag is self-referencing, or the page has no canonical tag)
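
If you want to script these checks, the sketch below applies them to a page you have already fetched. It uses only the Python standard library; the function and class names are mine. It cannot detect soft 404s, it expects absolute canonical URLs, and the standard library robots.txt parser does not support every pattern Google does (wildcards, for example), so treat the result as a first pass.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _HeadParser(HTMLParser):
    """Collects the meta robots value and canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = (attrs.get("content") or "").lower()
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")


def indexability_issues(url, status, html, robots_txt, x_robots_tag=""):
    """Return a list of reasons the URL may not be indexable (empty = none found)."""
    issues = []
    if 300 <= status < 400:
        issues.append("redirects")
    elif status != 200:
        issues.append(f"status {status}")
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch("Googlebot", url):
        issues.append("blocked in robots.txt")
    head = _HeadParser()
    head.feed(html)
    if "noindex" in head.robots or "noindex" in x_robots_tag.lower():
        issues.append("noindex")
    if head.canonical and head.canonical != url:
        issues.append(f"canonicalised to {head.canonical}")
    return issues
```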

Additionally, even if they are still indexable, they may no longer be indexed in Google.

Again, using Screaming Frog in list mode, plug in the GSC account for the site and enable the URL inspection setting. https://www.screamingfrog.co.uk/how-to-automate-the-url-inspection-api/

This will pull the status of the URLs and tell you if they are currently indexed in Google.

(The API is limited to 2000 URLs a day, so you may have to work in chunks if checking a large number of URLs.)
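
Splitting a long URL list into daily batches is straightforward. A minimal sketch, with `daily_batches` as a made-up helper name:

```python
def daily_batches(urls, daily_limit=2000):
    """Split a list of URLs into batches no larger than the daily limit."""
    return [urls[i:i + daily_limit] for i in range(0, len(urls), daily_limit)]
```

Ordering the list by traffic lost first means the most important pages are checked on day one.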

4.2. Are the affected pages’ titles the same as pre-drop?

We know page titles can have a strong effect on a page’s ranking and also on CTR, so another sanity check is to see if the page titles changed during the drop.

If it’s not obvious whether the page titles have changed, try:

- If using WordPress, you may be able to do this via the history module.

- If you have old crawls in a site crawler, use these.

- You may be able to find the page at the https://web.archive.org/

- Try Google cache if it hasn’t been updated

Nick from SEO Testing also points out that Google may be changing titles itself in the SERPs, which could also affect CTR. Google often does this if it thinks it can make a better title than what is already defined.

More info on title changes here: https://moz.com/blog/ways-google-rewrites-title-tags
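
If you do have an old crawl and a new one, comparing the titles takes only a few lines. This assumes each crawl has been reduced to a mapping of URL to title, for example from a crawler’s export; the function name is hypothetical.

```python
def changed_titles(old_crawl, new_crawl):
    """Return {url: (old_title, new_title)} for URLs whose title differs.

    `old_crawl` and `new_crawl` map URL -> page title.
    """
    changes = {}
    for url, old_title in old_crawl.items():
        new_title = new_crawl.get(url)
        # Ignore leading/trailing whitespace so formatting noise is not flagged
        if new_title is not None and new_title.strip() != old_title.strip():
            changes[url] = (old_title, new_title)
    return changes
```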

4.3. Is the affected pages’ content still the same as pre-drop?

If the content on the pages has changed recently before the drop, this is one of the most obvious reasons for a drop in traffic.

These could be pages that have been refreshed or re-optimised for SEO, or there could be a bug that has changed the content.

If it’s not obvious whether the content has changed, try:

- If using WordPress, you may be able to do this via the history module.

- You may be able to find the page at the https://web.archive.org/

- Try Google cache if it hasn’t been updated

- Ask the team responsible for content on the site
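
Once you have an old copy of a page’s text (from any of the sources above) and the current one, Python’s `difflib` can give a rough measure of how much changed and which lines were removed. The function names here are my own, and extracting the text from the HTML is assumed to be done already.

```python
import difflib


def content_similarity(old_text, new_text):
    """Return a similarity ratio between 0 and 1 for two versions of a page's text."""
    old_words, new_words = old_text.split(), new_text.split()
    return difflib.SequenceMatcher(None, old_words, new_words).ratio()


def removed_lines(old_text, new_text):
    """Lines present in the old version but not in the new one."""
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    return [line[2:] for line in diff if line.startswith("- ")]
```

A ratio close to 1 means the content is essentially unchanged. Lower values are worth a manual look, starting with the removed lines.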

4.4. Did anything else change on the site?

Many other changes to a website can affect SEO performance, such as site structure (URLs, internal linking), design, layout, CMS, and frontend, plus many other changes.

If you know you have recently changed such elements, that can often be the reason for a drop.

If you aren’t aware of any changes, communicate with your developers, or product team and check into what releases happened around the time of the drop (before, not just after).

Get a full list of all changes that happened just before, even if they would not seem significant. Analyse these changes and try to understand if they may have had an impact.

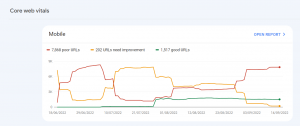

4.5. Any new or increase in issues in GSC?

In GSC, check the different reports, such as the Coverage, Sitemaps, Page Experience, Core Web Vitals, and Mobile Usability sections, to see if any of the affected URLs are listed.

4.6. Audit the site for technical issues

I’d leave this for last, as it’s the task that’s going to take the longest time to do properly.

If everything else seems fine & there is no evidence of a Google update, there is possibly some technical issue that has caused a drop.

Now, you just need to find it!

Need some help on technical SEO audits? Then check out these great resources:

- Free checklists and resources: https://seosly.com/blog/seo-audits/

- Free Technical SEO Course: https://www.bluearrayacademy.com/courses/technical-seo-certification

- Paid Technical SEO Course: https://marketingsyrup.com/

Or Hire me! Technical SEO audits

Evidence of a Google update.

The next checks are generally used when it appears that a Google update happened during the drop in traffic, either announced or inferred from fluctuations reported in the industry or by tracking tools.

Changes caused by Google updates, especially Core Updates, are often based on the perceived quality of the page’s content.

A drop during a Core Update wouldn’t likely be caused by technical issues that the site already had.

So, these checks are based on analysing your content, keywords and your competitors.

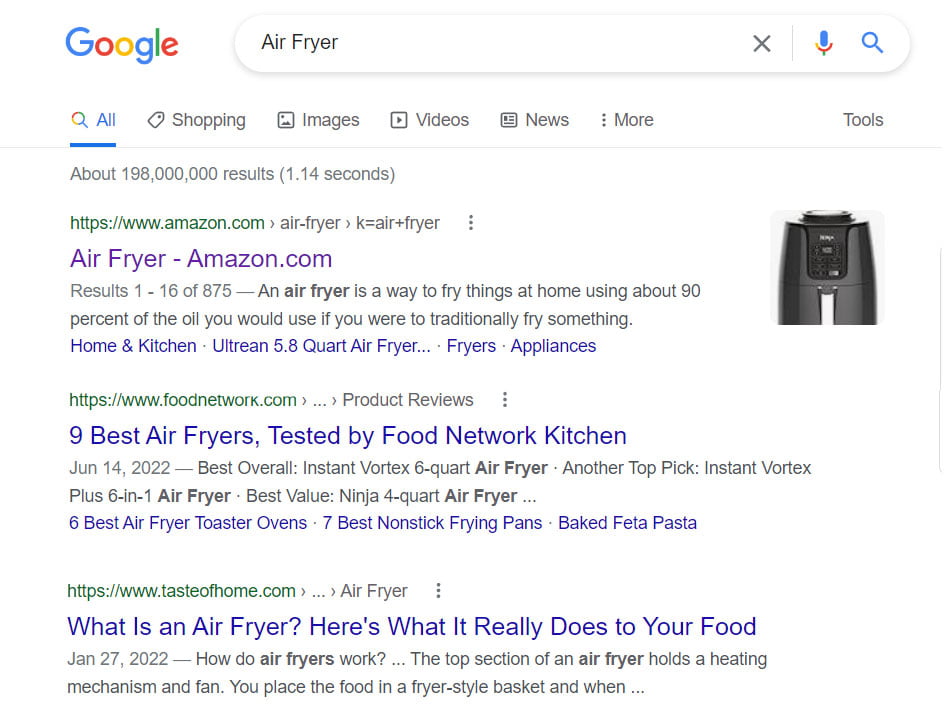

4.7. Does the intent of the dropped keyword/s match the content of the page?

So, we have the data for the keywords and the pages that have dropped in traffic, now it’s time to start analysing the site content and the SERPs manually.

Spot checking pages and the keywords they have dropped for, assess if the intent of the keywords matches the page intent.

During core updates, Google will sometimes do ‘intent shifting’, where it changes what it thinks people want to find in the results.

An example of this can be seen for the search term ‘Air Fryer’. At the time of writing, the SERPs are a mix of intent.

There are results for eCommerce category pages, review sites, & information sites. During a Google update, this could change and Google could, for example, change all the results to eCommerce sites – then any review or informational pages would lose traffic as they fall from the 1st page.

So, look at the keyword and the pages which have dropped, analyse the current SERPs and see if the intent Google perceives people are looking for, is the same as your page.

4.8. Does the page content of affected pages answer the question the dropped keyword/s asks?

Here you need to spot-check keywords and pages that have dropped and assess if the content really answers the question the keyword asks.

This can be difficult if you are the person who has created the content or been involved in the optimisation of it. Try to be impartial and think like a user landing on the page. If you had searched for it, would you be happy with the search result, does it satisfy you?

I have seen situations before where the assessed content does answer the search term in a roundabout way, but maybe it was too fluffy, not direct enough, or didn’t answer the question until after 1000 words of introduction.

4.9. Who is ranking above us now that we have dropped?

SEO is generally a zero-sum game, meaning if a site increases in rankings, other sites must drop at the same time.

Using the keywords and pages you have collected that have dropped, look at the SERPs and see who is now ranking above you.

To do this, you don’t need to know who was ranking exactly where before, but if your site has dropped from avg position 3 and now you’re in position 9, simply look at the sites above you.

We want to collect a sample of the keywords your pages have dropped for, along with the pages now ranking above them, for the analysis in the next step.

4.10. Do the pages ranking above answer the question better, have higher quality or a better experience than your page?

With the pages we collected in the previous step, it’s time to analyse our content vs the competitors to try and understand if their content better answers the question, has better quality content, or has a better user experience.

Again, this can be difficult if you’ve created the content; sometimes it’s hard to be impartial.

A practice I have used before is asking another team member from a different dept. to read blind samples of the content and tell us which is better. You can do the same, ask a friend or colleague, or even in SEO communities, such as on Reddit or Twitter.

Some things you can assess for:

- Does the content answer the question the keyword poses?

- Quality of content and does it follow Google Best Practices? Aleyda Solis has curated all the questions Google has mentioned over the years on quality content, get the checklist here: https://twitter.com/aleyda/status/1560550093941456896

- Quality of overall website

- Page experience (pop-ups, good UX, etc)

- Page Speed

- Perceived E-A-T of the page and the site overall: https://developers.google.com/search/blog/2019/08/core-updates#get-to-know-the-quality-rater-guidelines-and-e-a-t

Want to learn more about E-A-T? Check out these great guides:

- E-A-T: Expertise, Authoritativeness, and Trustworthiness

- What is E-A-T?

4.11. Winner vs losers

During Google updates, you will sometimes find that although some keywords/pages have dropped, other ones may be stable or have even increased.

This is because rankings are largely based on a per-page basis, rather than penalising an entire site (Although this can happen in some circumstances, such as a backlink penalty).

If this is the case, we can analyse this to try and uncover why.

During the analysis we did in part 2, we can gather any keywords/pages that were stable, or even better, increased, and analyse them in two ways:

a. External winners vs losers

Follow steps 4.8 – 4.10 and do the same analysis, but this time try to understand why your pages may have leapfrogged your competitors.

If you can come up with some theories about why you might have (better quality content for example), you can then use your ‘winner pages’ and compare these to your ‘losers’.

Is there anything different between these pages? Perhaps the content or some other element is better.

b. Internal winners vs losers

If you have some pages that were stable or increased, compare these pages to your pages that dropped.

Again, look for anything that sets the winners apart from the losers. Is there any pattern within the groups, such as better content, newer content, or a better experience?

The point in doing a winner vs losers analysis is to see if there is anything you can learn from the groups, then apply the changes to the loser sets of pages.
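
To build the two groups, you can classify pages by how much their clicks changed between the periods. The 20% threshold and the `classify_pages` name are arbitrary choices for illustration.

```python
def classify_pages(before_clicks, after_clicks, threshold=0.2):
    """Group pages into winners, stable, and losers by relative click change.

    `before_clicks` and `after_clicks` map page -> clicks for each period.
    """
    groups = {"winners": [], "stable": [], "losers": []}
    for page, clicks_before in before_clicks.items():
        if clicks_before == 0:
            continue  # no baseline to compare against
        change = (after_clicks.get(page, 0) - clicks_before) / clicks_before
        if change >= threshold:
            groups["winners"].append(page)
        elif change <= -threshold:
            groups["losers"].append(page)
        else:
            groups["stable"].append(page)
    return groups
```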

4.12. Did your pages/site lose any backlinks?

Backlinks can still be a ranking factor, so it can be useful to check in during a traffic drop to see if anything has changed.

Don’t look at the exact date of the drop, but look at the trends leading up to it. Did your site or the specific pages that dropped lose backlinks leading up to the drop?
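
If your backlink tool can export a list of gained and lost links with dates, the trend leading up to the drop can be summarised as a net change. This is a sketch under that assumption; the event format, the 60-day lookback, and the function name are all hypothetical.

```python
from datetime import date, timedelta


def net_link_change(link_events, drop_date, lookback_days=60):
    """Net change in backlinks in the period leading up to the drop.

    `link_events` is an iterable of (event_date, change) pairs, where change
    is +1 for a gained link and -1 for a lost link.
    """
    start = drop_date - timedelta(days=lookback_days)
    return sum(
        change for event_date, change in link_events
        if start <= event_date < drop_date
    )
```

The same calculation can be run for a competitor’s page to see whether it was gaining links over the same period.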

4.13. Did the pages/sites now ranking above us gain any backlinks?

As well as looking at your backlinks, you can look at the pages that have leapfrogged you in the SERPs.

Did the sites, or their specific pages, start to see a trend of more backlinks or higher quality links pointing to them leading up to your traffic drop?

Let me know if you have any comments, or suggestions to improve the process.
